Your observability costs are high because you're processing logs in the wrong place. Most platforms charge you to ingest everything first, then let you filter what you actually need. You've already paid to transmit, ingest, and index logs before you even start processing them. Processing logs before they hit expensive platforms avoids these costs.

Key Takeaways:

- First mile processing analyzes logs before they reach expensive observability platforms

- Reduce observability costs by up to 90% without changing your existing workflows

- Log shippers can intelligently route high-signal data to premium platforms while sending routine logs to cheap storage

- All raw data is preserved in S3, ensuring compliance and debugging capabilities remain intact

What is the First Mile?

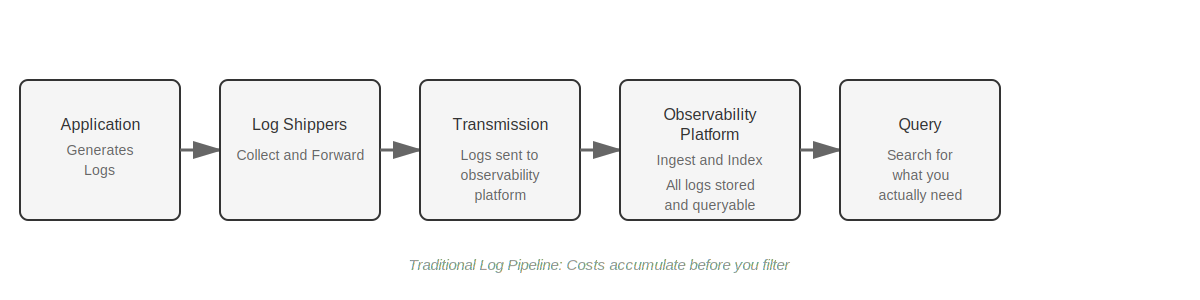

The first mile begins the moment logs leave your applications on their way to an observability platform. For most companies, the pipeline is straightforward: applications generate logs, log shippers collect them and transmit them to the platform, the platform ingests and indexes them (and you start paying), and finally you query what you need.

The first mile is the middle step, after generation but before ingestion, where log shippers collect and forward data. Most companies configure their log shippers to forward everything without any processing or filtering, as if their only job were to get logs to the platform as fast as possible. That approach is exactly why costs spiral out of control.

Why First Mile Processing Matters

Once logs reach your observability platform, you're paying for them. Splunk charges on ingestion, Sumo Logic charges on scanning, and Datadog charges on indexed volume. The pricing models differ, but the outcome is the same: you pay for logs you'll never use.

Processing at the first mile means making cost-saving decisions before ingestion:

- Filter noise from signal before paying premium indexing costs

- Identify duplicate log entries that don't need to be stored multiple times

- Route high-value logs to fast query platforms while sending routine logs to cheap object storage

- Reduce data transfer costs by processing logs closer to the source
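The filtering, deduplication, and routing decisions above can be sketched as a minimal first-mile processor. This is not Grepr's implementation: the record shape (`service`, `level`, `message`), the `HIGH_SIGNAL_LEVELS` and `NOISE_MARKERS` rules, and the in-memory `Sinks` destinations are all hypothetical stand-ins. The sketch archives every raw record, drops known noise, forwards exact repeats only once per batch, and routes only high-severity records to the premium destination.

```python
from dataclasses import dataclass, field

# Hypothetical rules. The article says Grepr classifies logs with machine
# learning; a fixed severity list and substring match are used here only
# to keep the sketch short.
HIGH_SIGNAL_LEVELS = {"ERROR", "WARN"}
NOISE_MARKERS = ("GET /healthz",)


@dataclass
class Sinks:
    """In-memory stand-ins for real destinations."""
    premium: list = field(default_factory=list)  # indexed observability platform
    archive: list = field(default_factory=list)  # raw logs in object storage


def process_batch(records, sinks):
    """Archive, filter, deduplicate, and route one batch of log records."""
    seen = set()
    for rec in records:
        # Keep every raw record in cheap storage so nothing is lost.
        sinks.archive.append(rec)
        # Filter: known noise never reaches the paid platform.
        if any(marker in rec["message"] for marker in NOISE_MARKERS):
            continue
        # Deduplicate: forward each (service, level, message) once per batch.
        key = (rec["service"], rec["level"], rec["message"])
        if key in seen:
            continue
        seen.add(key)
        # Route: only high-signal records go to the premium platform.
        if rec["level"] in HIGH_SIGNAL_LEVELS:
            sinks.premium.append(rec)
    return sinks


batch = [
    {"service": "api", "level": "INFO", "message": "GET /healthz 200"},
    {"service": "api", "level": "ERROR", "message": "db timeout"},
    {"service": "api", "level": "ERROR", "message": "db timeout"},
    {"service": "api", "level": "INFO", "message": "GET /orders 200"},
]
sinks = process_batch(batch, Sinks())
print(len(sinks.premium), len(sinks.archive))  # 1 4
```

Archiving before filtering is the design choice that keeps the aggressive filtering safe: nothing the premium path drops is actually lost.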

Make these decisions after ingestion and you're paying for your mistakes; make them before ingestion and you pay only for what matters.

What Actually Happens at the First Mile

Let's follow a log entry through a typical setup to see where costs accumulate. Your application logs an event: a successful API request, an error, or a health check. The log shipper collects it and forwards it downstream without applying any intelligence. The platform ingests it, and you start paying. The platform then indexes it so it can be searched in milliseconds, and you pay more. Later, when you query for errors, that successful API request and health check are scanned as part of the time window, adding to your costs yet again. You never needed those logs, but you paid for them at every step.

How Grepr Reduces Log Costs at the First Mile

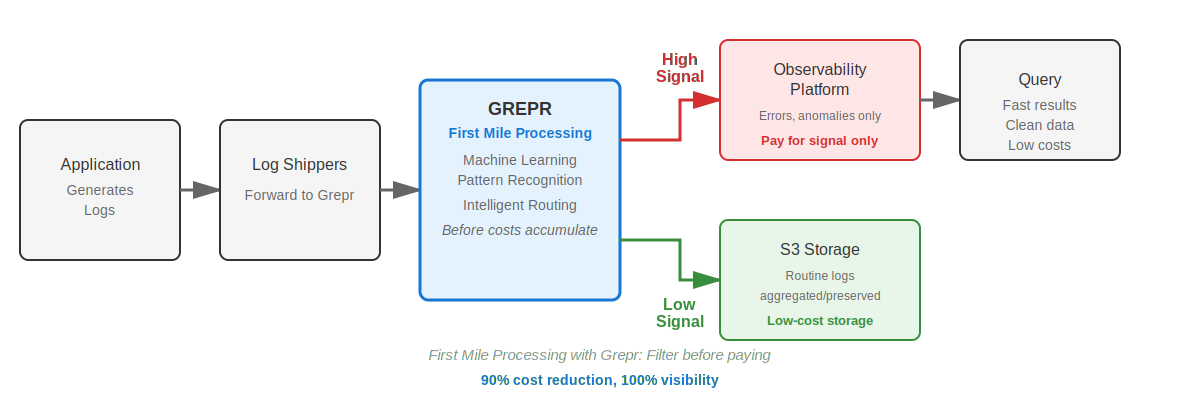

Log shippers don't have to forward everything blindly; they can make intelligent routing decisions. When Grepr sits at the first mile, the entire flow changes. Your application still logs events the same way, but the log shipper forwards them to Grepr instead of directly to your observability platform.

Grepr processes logs in real time, using machine learning to identify whether each log contains high-value signal or routine noise. Errors, anomalies, and unusual patterns are recognized as high-signal events worth preserving for analysis, while repetitive routine operations are identified as low-signal candidates for aggregation. High-signal logs route directly to your observability platform, while low-signal logs are aggregated into summaries and sent to cheap storage such as S3.
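Grepr uses machine learning to separate signal from noise; the sketch below swaps in a much simpler technique, regex-based template masking, purely to illustrate what aggregating low-signal logs into summaries can look like. The masking rules, the `<uuid>`/`<num>` placeholders, and the summary format are hypothetical.

```python
import re
from collections import Counter

# Hypothetical masking rules: replace variable fields with placeholders so
# that messages differing only in IDs or numbers share one template.
# UUIDs are masked first because they also contain digits.
_MASKS = [
    (re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b"), "<uuid>"),
    (re.compile(r"\d+(?:\.\d+)?"), "<num>"),
]


def to_template(message: str) -> str:
    """Mask the variable parts of a log message."""
    for pattern, placeholder in _MASKS:
        message = pattern.sub(placeholder, message)
    return message


def summarize(messages):
    """Collapse low-signal messages into (template, count) summaries."""
    counts = Counter(to_template(m) for m in messages)
    return [{"template": t, "count": c} for t, c in counts.most_common()]


logs = [
    "shipment 1001 delivered in 45ms",
    "shipment 1002 delivered in 52ms",
    "shipment 1003 delivered in 38ms",
    "cache refreshed in 120ms",
]
for row in summarize(logs):
    print(row)
# {'template': 'shipment <num> delivered in <num>ms', 'count': 3}
# {'template': 'cache refreshed in <num>ms', 'count': 1}
```

Four raw lines become two summary rows here; on real traffic, where routine messages repeat thousands of times, the compression is far larger.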

You pay to index what matters while everything else costs pennies in object storage. When your observability platform only gets meaningful logs, you'll notice the difference. Queries come back faster. Dashboards make more sense. Your bill shrinks.
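The arithmetic behind "pay to index what matters" can be sketched with a simple cost model. Every price and volume below is a hypothetical input, not a vendor quote, and storage is simplified to one flat per-GB charge on the raw volume.

```python
def monthly_cost(gb_per_day, premium_per_gb, storage_per_gb,
                 high_signal_fraction, days=30):
    """Compare sending everything to a premium platform with split routing.

    All prices are hypothetical inputs, not vendor quotes. Storage is
    simplified to one flat per-GB charge on the month's raw volume.
    """
    total_gb = gb_per_day * days
    # Everything is ingested and indexed at the premium rate.
    all_to_platform = total_gb * premium_per_gb
    # Only the high-signal share is indexed; every raw log is still archived.
    split_routing = (total_gb * high_signal_fraction * premium_per_gb
                     + total_gb * storage_per_gb)
    return all_to_platform, split_routing


# Hypothetical inputs: 100 GB/day, $2.00/GB indexed, $0.02/GB stored,
# and 10% of logs classified as high-signal.
all_cost, split_cost = monthly_cost(100, 2.0, 0.02, 0.1)
print(f"${all_cost:,.2f} vs ${split_cost:,.2f}")  # $6,000.00 vs $660.00
print(f"savings: {1 - split_cost / all_cost:.0%}")  # savings: 89%
```

With these assumed numbers, indexing 90% less data cuts the bill by slightly under 90%, because archiving every raw log in object storage is cheap but not free.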

See how Jitsu achieved these results with first mile processing, cutting their Datadog costs by 90% while managing millions of shipments that each generated 400 logs.

Try First Mile Processing Risk-Free

Processing logs at the first mile before they reach your observability platform is the difference between paying for noise and paying only for signal. With Grepr, you can see this cost reduction in action without changing your applications, dashboards, or workflows.

Try Grepr free and watch your log volumes drop by 90% while your visibility stays at 100%. No migration is required: just redirect your log shippers and start saving.

Frequently Asked Questions

How much can first mile log processing reduce observability costs?

First mile processing with Grepr typically reduces observability platform costs by 90-99% by filtering routine logs before ingestion. You only pay premium indexing costs for high-signal events like errors and anomalies, while routine operational logs route to low-cost S3 storage at pennies per gigabyte. Companies like FOSSA have seen similar results, cutting their Datadog costs by over 90% with no workflow changes.

Does first mile processing work with my existing log management tools?

Yes, first mile processing works with Datadog, Splunk, New Relic, Sumo Logic, and other observability platforms. Your log shippers redirect to Grepr for processing, then Grepr forwards filtered logs to your existing tools. Your engineers keep using the same dashboards, queries, and workflows they already know. Learn how this works with Grafana Cloud, where Grepr cuts log costs by up to 90% through simple configuration changes.

What happens to logs that don't get sent to my observability platform?

All logs are preserved in S3 or other low-cost object storage, so you meet compliance requirements without paying premium indexing costs. Low-signal logs stay accessible for audits or when you need to investigate something later. When incidents occur, Grepr can surface full raw logs on demand and backfill relevant historical data from storage to your observability platform for detailed analysis.
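Backfilling during an incident starts with picking the right raw records out of storage. As a hedged illustration (not Grepr's API), the function below selects archived records for one service within a padded incident window; the record keys (`ts`, `service`, `message`) and the five-minute default padding are assumptions.

```python
from datetime import datetime, timedelta


def select_for_backfill(archived, service, incident_start, incident_end,
                        padding=timedelta(minutes=5)):
    """Pick archived raw records for one service around an incident window.

    `archived` is any iterable of dicts with hypothetical keys 'ts'
    (datetime), 'service', and 'message'. Padding on both sides captures
    the lead-up to and aftermath of the incident.
    """
    lo = incident_start - padding
    hi = incident_end + padding
    return [r for r in archived
            if r["service"] == service and lo <= r["ts"] <= hi]


t0 = datetime(2024, 5, 1, 12, 0)
archive = [
    {"ts": t0 - timedelta(minutes=30), "service": "api", "message": "GET /orders 200"},
    {"ts": t0 - timedelta(minutes=2), "service": "api", "message": "GET /orders 200"},
    {"ts": t0 + timedelta(minutes=1), "service": "api", "message": "db timeout"},
    {"ts": t0 + timedelta(minutes=1), "service": "billing", "message": "invoice sent"},
]
hits = select_for_backfill(archive, "api", t0, t0 + timedelta(minutes=3))
print(len(hits))  # 2
```

Including the routine "GET /orders 200" just before the timeout is the point: low-signal context that was never indexed becomes useful once an incident makes it relevant.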

How long does it take to implement first mile log processing?

Implementation takes about 30 minutes: you redirect your log shippers to Grepr instead of directly to your observability platform. There are no application code changes, no data migration, and no changes to how engineers query and analyze logs. Grepr works out of the box and starts reducing spend immediately, so getting it to work exactly as you need becomes a matter of reactive tuning rather than starting from a blank slate.
