Why observability needs an upgrade

Cloud-native architectures generate enormous amounts of telemetry. Every new deployment and microservice multiplies logs, metrics, and traces. The result is too much data, too little clarity, and rising costs. Anyone who has received a Datadog bill knows this to be true.

Observability pipelines help fix that problem. They reshape and route telemetry before it reaches expensive tools. They aim to turn large volumes of telemetry into useful insights, but most pipelines fall short. The process is often too manual and complex, leaving engineers with limited value from their data.

Observability vs monitoring

Observability provides visibility into what’s happening across a system. It allows you to know when something breaks and why. Monitoring watches predefined signals for known failure modes, and it depends on observability to detect and analyze those events.

At scale, the difference matters. Containers restart without warning, dependencies fail in unexpected ways, and latency spreads across layers. Predefined dashboards can no longer keep up.

What an observability pipeline does

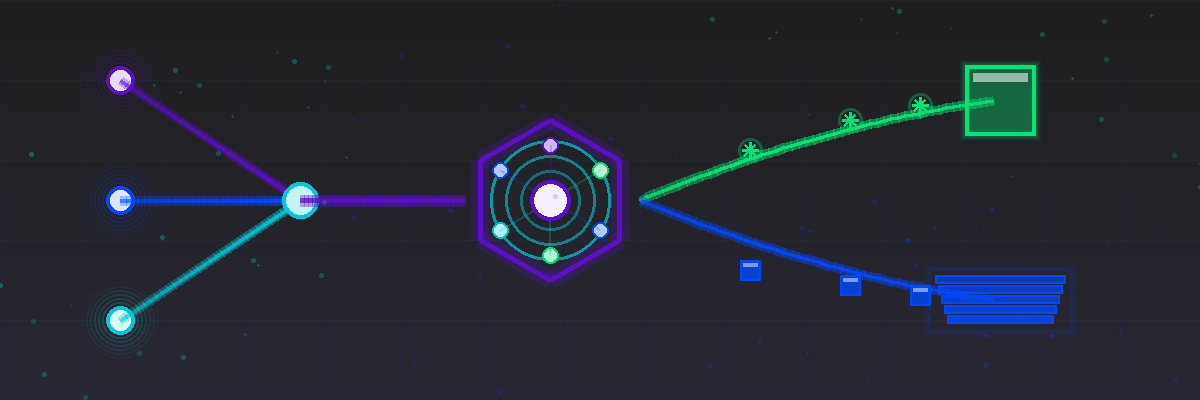

An observability pipeline connects telemetry sources to observability tools. It helps teams collect, process, and send data efficiently.

1. Collection

Gathers logs, metrics, and traces from services, agents, and runtime environments.

2. Transformation

Cleans, structures, and enriches data with context like service name, build ID, or user session.

3. Routing

Delivers the right data to the right destination. High-value data goes to analysis tools. Long-term data moves to affordable storage.

The outcome is cleaner data, faster queries, and clearer answers.
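
To make the three stages concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular product implements a pipeline: the log line format, the `build_id` value, the `analysis` and `archive` destination names, and the rule that `ERROR` and `WARN` count as high-value are all hypothetical.

```python
from typing import Iterable, Iterator

# Hypothetical raw log lines standing in for a real telemetry source.
RAW_LINES = [
    "2024-05-01T12:00:00Z ERROR checkout payment declined",
    "2024-05-01T12:00:01Z DEBUG checkout cache hit",
    "2024-05-01T12:00:02Z INFO search query served",
]


def collect(lines: Iterable[str]) -> Iterator[str]:
    """Collection: yield raw records from a source (here, an in-memory list)."""
    for line in lines:
        yield line


def transform(lines: Iterable[str], build_id: str) -> Iterator[dict]:
    """Transformation: parse each line into a structured event and enrich it."""
    for line in lines:
        # Assumed format: "<timestamp> <level> <service> <free-text message>"
        timestamp, level, service, message = line.split(" ", 3)
        yield {
            "timestamp": timestamp,
            "level": level,
            "service": service,
            "message": message,
            "build_id": build_id,  # enrichment; the value is illustrative
        }


def route(events: Iterable[dict]) -> dict:
    """Routing: high-value events go to analysis, the rest to cheaper storage."""
    destinations = {"analysis": [], "archive": []}
    for event in events:
        # Assumed rule: errors and warnings are the high-value data.
        target = "analysis" if event["level"] in {"ERROR", "WARN"} else "archive"
        destinations[target].append(event)
    return destinations


def run_pipeline(lines: Iterable[str], build_id: str = "build-123") -> dict:
    """Chain the three stages: collect, then transform, then route."""
    return route(transform(collect(lines), build_id))


if __name__ == "__main__":
    routed = run_pipeline(RAW_LINES)
    print(len(routed["analysis"]), "event(s) sent to analysis")
    print(len(routed["archive"]), "event(s) sent to archive")
```

Each stage is a generator or a plain function, so records stream through one at a time and any stage can be swapped out without touching the others.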

The importance of high-cardinality data

High-cardinality data such as user IDs or request IDs shows what is really happening in production. Averages hide anomalies. Detail reveals them.

Storing every detail can get expensive. Pipelines help decide what to keep, what to summarize, and where to store it so you maintain accuracy without overspending.
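
A small worked example shows why averages hide anomalies and what a keep-or-summarize decision might look like. This is a sketch under assumptions: the request data, the user IDs, and the 1000 ms threshold are invented for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical request events: (user_id, latency in milliseconds).
REQUESTS = [
    ("user-1", 100), ("user-1", 110),
    ("user-2", 90), ("user-2", 100),
    ("user-3", 2000), ("user-3", 2200),
    ("user-4", 95), ("user-4", 105),
]

SLOW_MS = 1000  # illustrative threshold, not a recommendation


def overall_average(requests):
    """One number for the whole fleet: cheap to store, but hides who is affected."""
    return mean(latency for _, latency in requests)


def average_by_user(requests):
    """Group by the high-cardinality field to see which user is suffering."""
    by_user = defaultdict(list)
    for user_id, latency in requests:
        by_user[user_id].append(latency)
    return {user: mean(values) for user, values in by_user.items()}


def keep_or_summarize(requests, slow_ms=SLOW_MS):
    """Keep slow requests in full detail; reduce the rest to a count and a mean."""
    kept = [request for request in requests if request[1] >= slow_ms]
    rest = [latency for _, latency in requests if latency < slow_ms]
    summary = {"count": len(rest), "mean_ms": mean(rest) if rest else None}
    return kept, summary


if __name__ == "__main__":
    print("overall average:", overall_average(REQUESTS))
    print("per-user averages:", average_by_user(REQUESTS))
    kept, summary = keep_or_summarize(REQUESTS)
    print("kept in full:", kept)
    print("summarized:", summary)
```

With this data the overall average is 600 ms, a value no user actually experiences. Grouping by user ID shows that three users sit near 100 ms while `user-3` averages 2100 ms. The keep-or-summarize step stores only the two slow requests in full and collapses the other six into a single summary record.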

Engineering for observability

Building observability is an engineering challenge. The best systems follow a few key practices:

- Use structured, context-rich events instead of unstructured logs

- Keep consistent schemas across services

- Retain full detail where it improves decisions

- Strip sensitive data for compliance

- Review what data actually helps detect and resolve issues

Teams that build and maintain observability pipelines recover faster and operate more reliably.
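
Several of the practices above can be sketched in a few lines of Python: emitting a structured event, checking it against a shared schema, and stripping sensitive values before it leaves the service. The required fields, the list of sensitive keys, and the email pattern are assumptions for illustration. Real compliance rules will be broader than this.

```python
import json
import re

# Assumed shared schema: every service emits at least these fields.
REQUIRED_FIELDS = ("timestamp", "service", "level", "message")

# Hypothetical set of keys treated as sensitive.
SENSITIVE_KEYS = {"email", "password", "credit_card"}

# Simple illustrative pattern for email addresses inside free text.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def validate(event: dict) -> None:
    """Raise ValueError if the event is missing any field of the shared schema."""
    missing = [field for field in REQUIRED_FIELDS if field not in event]
    if missing:
        raise ValueError(f"event is missing required fields: {missing}")


def redact(event: dict) -> dict:
    """Return a copy with sensitive keys masked and emails scrubbed from strings."""
    clean = {}
    for key, value in event.items():
        if key in SENSITIVE_KEYS:
            clean[key] = "[REDACTED]"
        elif isinstance(value, str):
            clean[key] = EMAIL_PATTERN.sub("[REDACTED]", value)
        else:
            clean[key] = value
    return clean


def to_structured_log(event: dict) -> str:
    """Validate, redact, and serialize an event as one JSON line."""
    validate(event)
    return json.dumps(redact(event), sort_keys=True)


if __name__ == "__main__":
    event = {
        "timestamp": "2024-05-01T12:00:00Z",
        "service": "auth",
        "level": "WARN",
        "message": "login failed for ana@example.com",
        "email": "ana@example.com",
        "attempts": 3,
    }
    print(to_structured_log(event))
```

Validating before serializing means a schema drift fails loudly at the source instead of surfacing later as a broken query.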

How Grepr changes the game

Grepr is an observability data platform that includes a built-in pipeline. The pipeline connects agents and observability tools, while the platform manages processing, storage, and optimization to give engineers full visibility and control over their data.

Grepr processes all traces, logs, and metrics, filters what matters, and routes data intelligently. By lowering storage and ingestion needs, it reduces observability costs by more than 90% while keeping full visibility and maintaining compliance.
