The term “grok” was coined by Robert A. Heinlein in his 1961 science fiction novel “Stranger in a Strange Land”. In the book, it means to understand something so thoroughly that the understanding becomes intuitive.

Structured logging across all application components is the ideal for streamlined log management. For new components, this is easy because modern frameworks support structured logging. In practice, however, many organizations have legacy components that are difficult to adapt. Grepr automatically recognizes many fields in unstructured logs, but inevitably some fields will not be detected.

When Grepr's automatic field detection misses an important field in an unstructured log message, you can step in manually with Grok. Grok is a pattern-matching and field-extraction syntax based on regular expressions. Grepr supports the predefined patterns that Logstash supports. A Grok match-and-extract rule takes the form:

%{PATTERN_NAME:field_name}

The field_name is optional; if it is omitted, the pattern is matched but nothing is extracted. Multiple Grok statements can be combined to match and extract several fields from one unstructured log line. Consider this example log line:

2025-07-06 14:23:36 ERROR [checkout] Security handshake failed

The following Grok expression matches the line and extracts all four fields:

%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:severity} \[%{WORD:service}\] %{GREEDYDATA:message}

Characters outside the Grok statements are treated as regular expression text. The spaces in the example therefore match literally, while the square brackets must be escaped with a backslash because they have special meaning in regular expressions.
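To make the mechanics concrete, here is a minimal Python sketch of how a Grok expression can be expanded into an ordinary regular expression with named groups. It is an illustration only, not how Grepr or Logstash implement Grok: the helper names (`grok_to_regex`, `extract`) are hypothetical, and the four pattern definitions are simplified stand-ins for the much broader Logstash originals.

```python
import re

# Simplified stand-ins for four Logstash patterns (assumption: the real
# definitions accept more formats, e.g. time zones and fractional seconds).
GROK_PATTERNS = {
    "TIMESTAMP_ISO8601": r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}",
    "LOGLEVEL": r"(?:TRACE|DEBUG|INFO|WARN(?:ING)?|ERROR|FATAL)",
    "WORD": r"\b\w+\b",
    "GREEDYDATA": r".*",
}

# Matches %{PATTERN_NAME} or %{PATTERN_NAME:field_name}.
GROK_TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def grok_to_regex(grok: str) -> re.Pattern:
    """Expand each Grok statement; everything else stays as regex text."""
    def expand(match: re.Match) -> str:
        name, field = match.group(1), match.group(2)
        body = GROK_PATTERNS[name]
        # A named statement becomes a named group; an unnamed one only matches.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"

    return re.compile(GROK_TOKEN.sub(expand, grok))


def extract(grok: str, line: str):
    """Return the extracted fields, or None if the whole line does not match."""
    match = grok_to_regex(grok).fullmatch(line)
    return match.groupdict() if match else None


pattern = r"%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:severity} \[%{WORD:service}\] %{GREEDYDATA:message}"
line = "2025-07-06 14:23:36 ERROR [checkout] Security handshake failed"
fields = extract(pattern, line)
print(fields)
```

Because the escaped brackets and spaces pass through untouched, they end up in the final regular expression exactly as written, which is why the backslashes are needed.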

To check the syntax and results of a Grok statement, use an online tool such as Grok Debugger.

Using Grok With Grepr

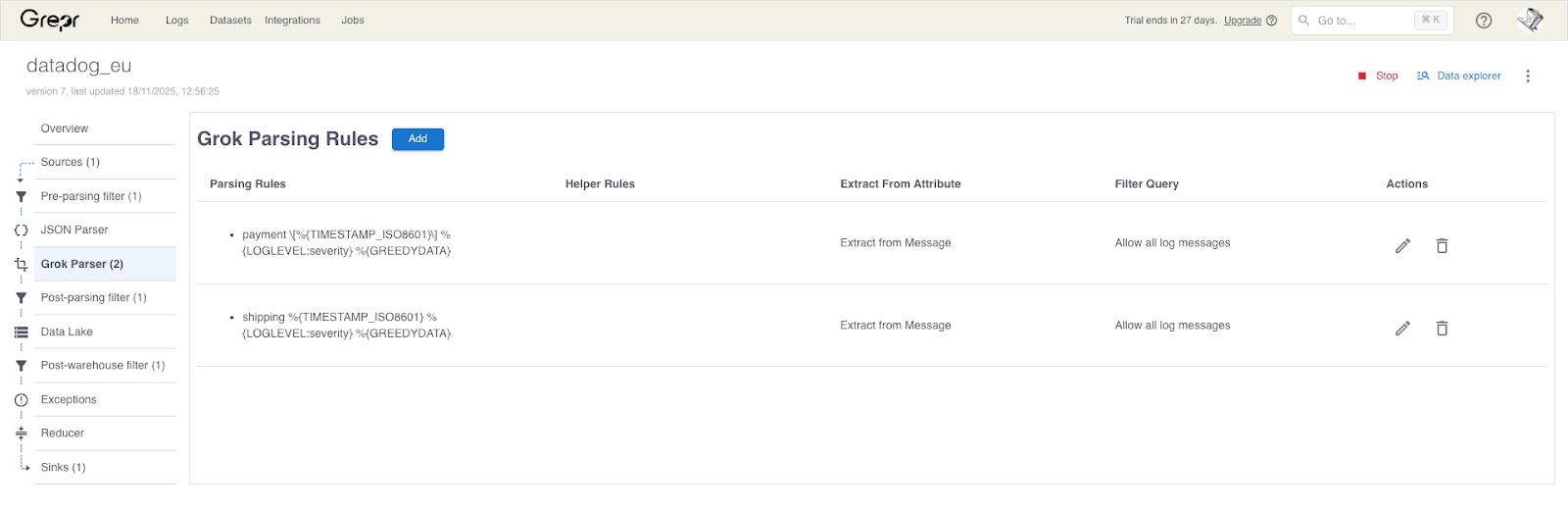

Grok Parser is part of the Grepr Intelligent Observability Data Engine. A new pipeline will not have any entries in the Grok Parser. To create a new Grok rule for a Grepr pipeline, go to the details of the pipeline on the Grepr web dashboard and select Grok Parser from the left-hand menu. Click the Add button to open the form.

The form has a built-in Grok tester. Copy and paste an example log message from the application component, then enter the Grok pattern. Check that it matches and that the fields and/or attributes are extracted as expected.

In this example, a legacy Java application logs unstructured data: the timestamp, followed by the severity and, finally, the message. Grepr is not automatically picking up the message severity, but with the Grok parser the severity can be extracted correctly.

The Grepr syntax is slightly different from plain Grok: the expression must start with a label, “shipping” in the example above. Note also that only the LOGLEVEL pattern has an extraction field; Grepr already handles the other data in this log line automatically. The Grok pattern must match the entire log line. The query restricts the Grok rule to log entries where the service attribute is “rs-shipping”.
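The behavior described above (one extracted field, a whole-line match, and a filter on the service attribute) can be sketched in plain Python. This is not Grepr's rule syntax or API: the event dictionary shape, the `apply_rule` helper, the sample log lines, and the simplified regular expressions are all assumptions made for illustration.

```python
import re

# Rough regex equivalent of a Grok rule in which only LOGLEVEL is named:
#   %{TIMESTAMP_ISO8601} %{LOGLEVEL:severity} %{GREEDYDATA}
# The sub-patterns are simplified stand-ins for the Logstash definitions.
RULE = re.compile(
    r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}"            # timestamp: matched, not extracted
    r" (?P<severity>TRACE|DEBUG|INFO|WARN|ERROR|FATAL)"  # the only extracted field
    r" .*"                                               # message: matched, not extracted
)


def apply_rule(event: dict) -> dict:
    """Add 'severity' to events from rs-shipping whose whole line matches."""
    if event.get("service") != "rs-shipping":
        return event  # the query filter excludes this event
    match = RULE.fullmatch(event["message"])
    if match is None:
        return event  # a partial match is not enough
    return {**event, **match.groupdict()}


# Hypothetical sample events.
hit = apply_rule({"service": "rs-shipping",
                  "message": "2025-07-06 14:23:36 ERROR Shipment quote failed"})
other_service = apply_rule({"service": "rs-cart",
                            "message": "2025-07-06 14:23:36 ERROR Cart unavailable"})
partial = apply_rule({"service": "rs-shipping",
                      "message": ">> 2025-07-06 14:23:36 ERROR Shipment quote failed"})
print(hit, other_service, partial, sep="\n")
```

Only the first event gains a severity field. The second is skipped by the service filter, and the third is skipped because the leading `>> ` means the pattern no longer covers the whole line.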

One of Grepr’s primary design goals is to automate configuration as much as possible. However, there will always be situations where automation falls short. In those cases, Grepr makes it easy to configure a manual override so that important fields are still captured correctly.

Frequently Asked Questions

What is Grok and how does it help with unstructured logs in Grepr?

Grok is a pattern matching and field extraction syntax that helps teams pull key details out of unstructured logs. When Grepr’s automatic field detection misses something, Grok rules fill the gap and ensure logs remain searchable and accurate.

When should I use a Grok rule in Grepr?

Use a Grok rule when a legacy or unstructured log line contains an important field that Grepr does not detect on its own. Grok lets you define exactly what to extract so the pipeline captures the fields you need.

Does Grok support the same patterns used in Logstash?

Yes. Grepr supports the same predefined Grok patterns available in Logstash, which makes it easy for teams already familiar with Grok to apply existing knowledge.

How do I test a Grok pattern before applying it in a pipeline?

Grepr provides a built-in Grok tester in the pipeline editor. Paste a sample log line and your Grok pattern into the form and verify that it extracts the expected fields before saving the rule.

Do Grok rules replace Grepr’s automatic parsing?

No. Grok rules act as overrides only when automatic parsing falls short. Grepr still handles all other fields automatically, and Grok ensures accuracy for the fields that require manual extraction.
