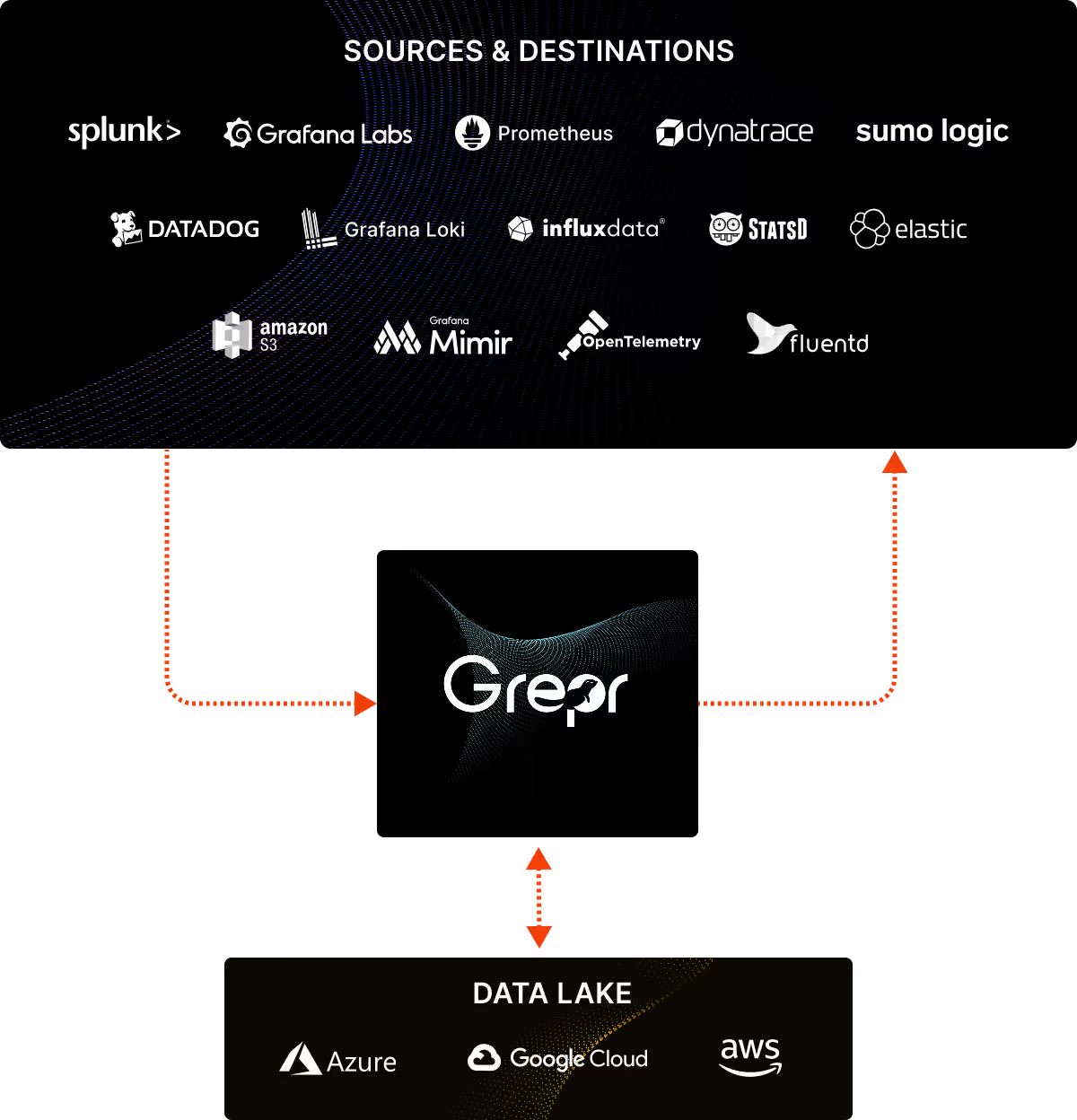

Grepr slips in like a shim between the log shippers and the aggregation backend. With a small configuration change, log shippers forward their logs to Grepr instead of the usual aggregation backend. Grepr automatically analyses each log entry and identifies similarities across all messages in real time. Noisy, repetitive messages are summarised while unique messages are passed straight through. Nothing is discarded: every message Grepr receives is persisted in low-cost storage.

Semantic Pipeline

As log messages arrive in Grepr, they are processed by a pipeline that parses them into Grepr's internal structure. Each log message has the following structure:

- ID: Globally unique identifier

- Received Timestamp: When Grepr received the message

- Event Timestamp: The timestamp from the log message

- Tags: A set of key-value pairs used to filter and route messages, such as host, service and environment.

- Attributes: Structured data and fields extracted from the message.

- Message: The text of the message.

- Severity: The OpenTelemetry standard severity number: 1-4 TRACE, 5-8 DEBUG, 9-12 INFO, 13-16 WARN, 17-20 ERROR and 21-24 FATAL. It is either derived from a message field or parsed out of the message text.
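To make the structure above concrete, here is a minimal sketch of one parsed message as a Python dataclass, together with the OpenTelemetry severity ranges listed above. The class name `LogEvent`, the field names and the helper `severity_name` are hypothetical illustrations, not Grepr's internal types.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any
import uuid

# OpenTelemetry severity number ranges (inclusive) and their short names.
SEVERITY_RANGES = [
    (1, 4, "TRACE"),
    (5, 8, "DEBUG"),
    (9, 12, "INFO"),
    (13, 16, "WARN"),
    (17, 20, "ERROR"),
    (21, 24, "FATAL"),
]


def severity_name(number: int) -> str:
    """Return the severity text for an OpenTelemetry severity number (1-24)."""
    for low, high, name in SEVERITY_RANGES:
        if low <= number <= high:
            return name
    raise ValueError(f"severity number out of range: {number}")


@dataclass
class LogEvent:
    """Hypothetical in-memory shape of one parsed log message."""
    message: str                      # the text of the message
    event_timestamp: datetime         # timestamp taken from the log message
    severity: int                     # OpenTelemetry severity number
    tags: dict[str, str] = field(default_factory=dict)        # e.g. host, service
    attributes: dict[str, Any] = field(default_factory=dict)  # extracted fields
    # Set when the message is received, i.e. when this object is created.
    received_timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc))
    # Globally unique identifier for the message.
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
```

For example, `severity_name(9)` returns `"INFO"` and `severity_name(18)` returns `"ERROR"`.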

Masking

Once the message is in a standard form, the real work can begin. Masking automatically identifies and masks out frequently changing values such as numbers, UUIDs, timestamps and IP addresses. This significantly improves the efficiency of our machine learning by normalising variable data into consistent patterns.
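A minimal sketch of regex-based masking, assuming placeholder tokens such as `<uuid>`, `<ip>` and `<num>`. Grepr's actual detectors and placeholder names are not described here, so the patterns below are illustrative only. The more specific patterns run first so that the digits inside a UUID, timestamp or IP address are not masked as plain numbers.

```python
import re

# Ordered from most to least specific; each value is replaced by a placeholder.
MASKS = [
    (re.compile(r"\b[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}"
                r"-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}\b"), "<uuid>"),
    (re.compile(r"\b\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}"
                r"(?:\.\d+)?(?:Z|[+-]\d{2}:?\d{2})?"), "<timestamp>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<ip>"),
    (re.compile(r"\b\d+(?:\.\d+)?\b"), "<num>"),
]


def mask(message: str) -> str:
    """Replace frequently changing values with stable placeholders."""
    for pattern, placeholder in MASKS:
        message = pattern.sub(placeholder, message)
    return message
```

With this sketch, `mask("User 42 logged in from 10.0.0.1")` becomes `"User <num> logged in from <ip>"`, and `"took 12 ms"` and `"took 873 ms"` both become `"took <num> ms"`, which is what lets the clustering step treat them as one pattern.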

Clustering

Clustering uses similarity metrics to group messages into patterns. The similarity threshold determines how closely messages must match to be considered part of the same pattern.
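The source does not name Grepr's similarity metrics, so this sketch swaps in a deliberately simple one: the fraction of whitespace-separated tokens that agree position by position in two masked messages. It shows how a similarity threshold decides whether a message joins an existing pattern or starts a new one. The `Clusterer` class and its `threshold` parameter are hypothetical.

```python
def similarity(a: list[str], b: list[str]) -> float:
    """Fraction of token positions where two token lists agree (0.0 to 1.0)."""
    if not a and not b:
        return 1.0
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return matches / max(len(a), len(b))


class Clusterer:
    """Assign masked messages to patterns using a similarity threshold."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        # The first message seen for each pattern acts as its representative.
        self.templates: list[list[str]] = []

    def assign(self, masked_message: str) -> int:
        """Return the index of the matching pattern, creating one if none match."""
        tokens = masked_message.split()
        for index, template in enumerate(self.templates):
            if similarity(tokens, template) >= self.threshold:
                return index
        self.templates.append(tokens)
        return len(self.templates) - 1
```

With `threshold=0.7`, `"User <num> logged in from <ip>"` and `"User <num> logged out from <ip>"` share 5 of 6 tokens (about 0.83) and land in the same pattern, while `"Disk <num> is full"` starts a new one. Raising the threshold makes patterns stricter; lowering it merges more messages together.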

Sampling

Once a pattern reaches a threshold, Grepr either stops forwarding messages that match the pattern or forwards only a sampled subset of them. If the pattern has been configured to be sampled, Grepr uses a logarithmic sampling algorithm. With the base set to 2 and the deduplication threshold set to 4, the first 4 matching messages are forwarded. Because those 4 messages have already been sent and 2^4 = 16, the next sample message is sent when the count reaches 32, and further samples follow at 64, 128, 256 and so on, doubling each time.
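The worked example above can be read as a simple rule: forward the first `threshold` messages, then forward a sample only when the running count equals `base**k` for an exponent `k` greater than the threshold. The following minimal sketch encodes that reading; the function name and the exact rule are assumptions inferred from the example's numbers, not Grepr's published algorithm.

```python
def should_forward(count: int, base: int = 2, threshold: int = 4) -> bool:
    """Decide whether the count-th message of a pattern is forwarded.

    Assumed rule: the first `threshold` messages always pass; after that,
    only counts equal to base**k with k > threshold are sampled.
    """
    if base < 2:
        raise ValueError("base must be at least 2")
    if count <= threshold:
        return True
    # Find the smallest power of `base` that is >= count.
    exponent, value = 0, 1
    while value < count:
        value *= base
        exponent += 1
    return value == count and exponent > threshold


forwarded = [n for n in range(1, 301) if should_forward(n)]
print(forwarded)  # [1, 2, 3, 4, 32, 64, 128, 256]
```

Because the gap between samples doubles each time, the number of forwarded messages grows with the logarithm of the pattern's volume: a pattern seen a million times yields only about twenty forwarded copies.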

Summarising

At the end of each time slot, Grepr generates a concise summary for each clustered pattern, including the following extra attributes:

- grepr.patternId: Unique identifier for the pattern

- grepr.rawLogsUrl: Direct link to view all raw messages for this pattern

- grepr.repeatCount: The number of messages aggregated into the summary
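As a sketch of the summarising step, the function below counts how often each pattern appeared in one time slot and emits one summary record per pattern carrying the three attributes listed above. The function name, the base URL and the `patternId` query parameter in the raw-logs link are hypothetical; only the attribute names come from the list.

```python
from collections import Counter
from urllib.parse import urlencode


def summarise_window(pattern_ids: list[str], raw_logs_base_url: str) -> list[dict]:
    """Build one summary record per pattern seen in a single time slot.

    `pattern_ids` holds the pattern of every message received in the slot.
    The raw-logs URL format is a hypothetical placeholder.
    """
    counts = Counter(pattern_ids)  # preserves first-seen order of patterns
    summaries = []
    for pattern_id, count in counts.items():
        summaries.append({
            "grepr.patternId": pattern_id,
            "grepr.rawLogsUrl": f"{raw_logs_base_url}?{urlencode({'patternId': pattern_id})}",
            "grepr.repeatCount": count,
        })
    return summaries
```

For example, a slot containing the patterns `["p1", "p2", "p1"]` produces a summary for `p1` with a repeat count of 2 and one for `p2` with a repeat count of 1.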

Exceptions

The machine learning in the semantic pipeline significantly reduces the volume of log data sent to the aggregation backend without filtering out essential data. However, there are always exceptions. Fortunately, a rules engine works alongside the machine learning, allowing you to configure and fine-tune which messages are filtered and which pass straight through.
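Grepr's rule syntax is not shown here, so the following is only a hypothetical sketch of the idea: explicit rules are checked first, and any message that matches one passes straight through, regardless of what the machine learning decided. The `Rule` fields, the `route` function and the `"pass"` decision value are illustrative names, not Grepr's API.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    """Hypothetical exception rule: match on tags and a minimum severity."""
    tags: dict[str, str] = field(default_factory=dict)
    min_severity: int = 1  # OpenTelemetry severity number, e.g. 17 = ERROR

    def matches(self, tags: dict[str, str], severity: int) -> bool:
        return severity >= self.min_severity and all(
            tags.get(key) == value for key, value in self.tags.items())


def route(tags: dict[str, str], severity: int, ml_decision: str,
          rules: list[Rule]) -> str:
    """Return "pass" if any rule matches, otherwise keep the ML decision."""
    if any(rule.matches(tags, severity) for rule in rules):
        return "pass"
    return ml_decision


rules = [Rule(min_severity=17), Rule(tags={"service": "payments"})]
```

With these example rules, every ERROR or FATAL message and every message from the `payments` service passes through, while an INFO message from another service keeps whatever decision the machine learning made, such as `"summarise"`.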

All The Data When You Need It

Using Grepr to automatically manage log data is like having an engineer look at each message and decide which ones are useful and which may not be. Most log messages are only useful when investigating an issue; only a small subset is needed to verify that everything is working as it should. Why pay to have every message indexed and stored by your log aggregation backend? With Grepr you can keep all messages in low-cost storage, where they can be queried for reporting or used to feed AI analysis. When an incident occurs, the relevant log messages can be quickly backfilled into the log aggregation backend to help restore service. With this strategy, you get the benefits of automated log reduction without changing any of the configurations, analytics or dashboards your team has built on its logging tools.

FAQ

1. What is a semantic pipeline in the context of log management?

Grepr's semantic pipeline is the sequence of processing stages every log message passes through when it arrives, including parsing, masking, clustering, sampling, and summarization. Together these stages identify which messages are unique and important, which are repetitive noise, and how to represent both accurately to your logging backend at a fraction of the original volume.

2. How does Grepr decide which log messages to forward and which to summarize?

Grepr uses similarity metrics to group incoming messages into patterns. Once a pattern reaches a configured threshold of repetition, Grepr stops forwarding every instance and instead forwards either a sampled subset using a logarithmic algorithm, or a concise summary at the end of each time window. Unique messages and errors always pass straight through.

3. What is masking and why does it matter for log reduction?

Masking is the step where Grepr automatically identifies and normalizes frequently changing values in log messages, such as numbers, UUIDs, timestamps, and IP addresses, replacing them with consistent placeholders. This allows the clustering stage to accurately recognize messages as belonging to the same pattern even when variable data differs between instances, which significantly improves the efficiency of the machine learning.

4. How does the logarithmic sampling algorithm work?

When a log pattern is configured for sampling rather than full suppression, Grepr uses a logarithmic scale to determine how often to forward a sample. With a base of 2 and a deduplication threshold of 4, Grepr forwards the first 4 messages, then sends one additional sample each time the count reaches a power of 2 above 2^4 = 16: 32, 64, 128, 256, and so on. This ensures you have representative samples at every order of magnitude without forwarding every duplicate.

5. What happens if the machine learning miscategorizes an important log message?

Grepr includes a rules engine that operates alongside the semantic pipeline. This allows teams to configure explicit exceptions, ensuring that specific message types, services, or patterns always pass through to the logging backend regardless of what the machine learning determines. The rules engine gives teams fine-grained control to tune behavior without replacing the automation.
