Observability tools are fantastic; without them, troubleshooting issues would be very difficult. However, their utility comes with an operating cost directly proportional to the volume of data they process and store. When troubleshooting, the more information that is available, the easier it is to find the cause of an issue; this convenience comes at a price.

Businesses are continually looking for ways to be more efficient: to achieve more with less. One area that is frequently highlighted is the monthly observability platform bill. The challenge is the disconnect between those who use the observability platform (the DevOps teams) and those who pay the bill (the business). The DevOps teams want more information and are not immediately aware of the cost implications. The business wants a smaller bill and is not immediately aware of the operational implications. With the two parties pulling in opposite directions, something has got to give.

With apologies to Abraham Lincoln, the rule for observability is: “Some observability data is useful all of the time. All observability data is useful some of the time. Not all observability data is useful all of the time.”

The predicament is that observability platforms make no allowance for this rule; they behave as if all data is equally important and charge accordingly. This appears to be an impossible situation to escape. Or is it?

Break The Conundrum

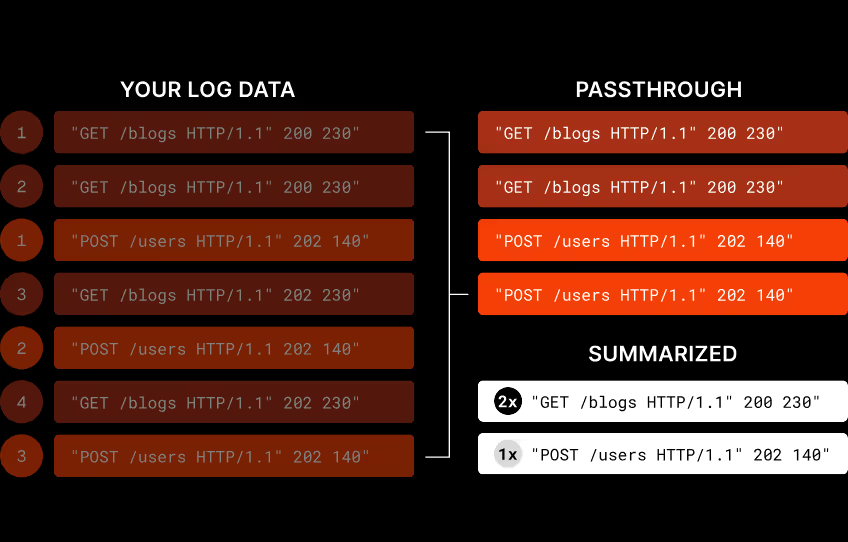

Grepr understands that some observability data is more important than the rest. It slips in like a shim between the log shippers and the aggregation and storage backend. Using Grepr’s semantic machine learning engine, frequent, noisy messages are summarised before being forwarded to the backend, while less frequent messages are passed straight through.
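As a rough illustration of the summarise-or-pass-through idea, the sketch below groups log lines in one window by a crude template (numbers and hex IDs masked) and collapses any template seen more often than a threshold into a single summary line. This is not Grepr’s semantic machine learning engine: the regex masking, the hypothetical `FREQUENT_THRESHOLD` value and the summary line format are simple stand-ins chosen only to show the shape of the technique.

```python
import re
from collections import Counter

# Hypothetical threshold: a template seen more often than this within one
# window is treated as frequent noise and summarised.
FREQUENT_THRESHOLD = 3

# Crude stand-in for semantic grouping: mask hex IDs and numbers so that
# similar messages map to the same template.
_VARIABLE = re.compile(r"\b0x[0-9a-fA-F]+\b|\b\d+(?:\.\d+)?\b")


def template_of(message: str) -> str:
    """Reduce a log line to a coarse template by masking its variable parts."""
    return _VARIABLE.sub("<*>", message)


def reduce_window(messages: list[str], threshold: int = FREQUENT_THRESHOLD) -> list[str]:
    """Pass rare messages through unchanged; emit one summary line per frequent template."""
    counts = Counter(template_of(m) for m in messages)
    output: list[str] = []
    summarised: set[str] = set()
    for m in messages:
        t = template_of(m)
        if counts[t] <= threshold:
            output.append(m)  # infrequent: forward verbatim
        elif t not in summarised:
            summarised.add(t)
            # frequent: a single line stands in for every occurrence
            output.append(f"[summary] {counts[t]}x {t}")
    return output


# Hypothetical window of log lines.
window = [
    "health check ok latency 3",
    "health check ok latency 5",
    "health check ok latency 4",
    "health check ok latency 7",
    "payment 991 failed: card declined",
]
for line in reduce_window(window):
    print(line)
```

Here the four health-check lines collapse into one summary, while the single payment failure is forwarded untouched.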

This approach reduces log volume by 90-98%, resulting in proportional cost savings. Under normal operations, this covers the “some of the data is useful all of the time” part of the rule.

All data sent to Grepr is retained in low-cost storage and can be searched through the Grepr dashboard and REST API using well-known query syntaxes (Splunk, Datadog and New Relic). The results of a query can optionally be used to create a backfill job that populates the backend with the data that was previously summarised. This takes care of the “all of the data is useful some of the time” part of the rule.
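The retain-everything, backfill-on-demand model can be sketched in memory. The `RawStore` class, the `backfill` function and the use of a Python predicate in place of a Splunk, Datadog or New Relic query are all hypothetical; this shows the idea, not Grepr’s REST API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class LogRecord:
    timestamp: float
    service: str
    message: str


@dataclass
class RawStore:
    """Hypothetical stand-in for low-cost storage that keeps every record received."""
    records: list[LogRecord] = field(default_factory=list)

    def append(self, record: LogRecord) -> None:
        self.records.append(record)

    def query(self, start: float, end: float,
              predicate: Callable[[LogRecord], bool]) -> list[LogRecord]:
        """Return retained records with start <= timestamp < end that match the predicate."""
        return [r for r in self.records if start <= r.timestamp < end and predicate(r)]


def backfill(store: RawStore, backend: list[LogRecord], start: float, end: float,
             predicate: Callable[[LogRecord], bool]) -> int:
    """Copy matching raw records into the backend, skipping any already there.

    Returns the number of records added.
    """
    already = set(backend)  # frozen dataclass instances are hashable
    added = 0
    for r in store.query(start, end, predicate):
        if r not in already:
            backend.append(r)
            already.add(r)
            added += 1
    return added


# Hypothetical usage: the backend only saw summaries; pull back raw checkout logs for t in [3, 6).
store = RawStore()
for t in range(10):
    store.append(LogRecord(float(t), "checkout", f"request {t} ok"))
backend: list[LogRecord] = []
print(backfill(store, backend, 3.0, 6.0, lambda r: r.service == "checkout"))
```

Skipping records already present keeps a repeated backfill from ingesting the same data twice, which matters when the backend bills per unit ingested.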

Only What You Need

Grepr provides an escape route from the impossible situation of reducing observability costs without negatively affecting operational capability. The DevOps teams maintain a high level of detail and do not have to drop data. The business obtains a smaller monthly bill for the observability platform. All parties are happy and the conundrum is solved.

By shipping only 2-10% of the log data to the observability platform, the monthly usage bill falls proportionally. The remaining 90-98% is not lost: Grepr retains it, it is immediately available for queries, and it can be selectively backfilled on demand when needed for incident investigation.
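The “proportional reduction” is simple arithmetic if the backend bill is assumed to scale linearly with ingested volume. The monthly volume and per-GB price below are hypothetical, and the sketch models only the backend usage bill.

```python
def backend_bill(ingested_gb: float, price_per_gb: float) -> float:
    """Backend usage bill, assuming it scales linearly with ingested volume."""
    return ingested_gb * price_per_gb


def savings(raw_gb: float, shipped_fraction: float, price_per_gb: float) -> tuple[float, float]:
    """Return (bill before, bill after) when only shipped_fraction of the raw volume is shipped."""
    before = backend_bill(raw_gb, price_per_gb)
    after = backend_bill(raw_gb * shipped_fraction, price_per_gb)
    return before, after


# Hypothetical figures: 10,000 GB of logs per month at 0.10 currency units per GB.
for fraction in (0.10, 0.02):
    before, after = savings(10_000, fraction, 0.10)
    print(f"ship {fraction:.0%}: {before:,.2f} -> {after:,.2f} ({1 - after / before:.0%} saved)")
```

Under this linear model, shipping 10% or 2% of the volume cuts the backend bill by 90% or 98% respectively, matching the range quoted above.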

“Not all observability data is useful all of the time,” so why pay for all of it all of the time? With Grepr, you only pay for the data you need, when you need it.
