Over the past five years, the rise of Kubernetes and the explosion of microservices have led to an unprecedented increase in the volume of logs generated by applications. Each application today could consist of tens or hundreds of microservices, each of which could have tens of instances running in containers emitting hundreds of log messages per second. Many engineering leaders are driving their teams to reduce observability costs, starting with logs, but often, this is an enormous undertaking that needs to be repeated every few years.

In this blog post, I'll highlight four basic techniques for reducing log volumes and compare their pros and cons. These techniques should be available in most log aggregation systems and observability pipelines. They're simple and unlikely to be sufficient on their own for an enterprise, but they're a good starting point. In the next blog post, I'll talk about three advanced techniques and discuss their availability across common tools.

Basic Technique 1: Increase severity threshold

One of the simplest techniques to implement is increasing the severity threshold, either for logs emitted at the source or for logs accepted at the log aggregation system. For example, you can increase the threshold from INFO to WARN and no longer collect INFO logs.

Changing the log severity emitted at the source can require code changes and can be harder to revert quickly. On the other hand, since the logs are no longer emitted at the source, you would save any costs needed to transmit and collect the logs.
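As a rough illustration of the source-side approach, here is a minimal sketch using Python's standard `logging` module. The `LOG_LEVEL` environment variable and the `checkout-service` logger name are assumptions made for this example. Reading the threshold from configuration rather than hard-coding it is one way to make the change easier to revert.

```python
import io
import logging
import os

# Level names this sketch accepts; anything else falls back to the default.
LEVELS = {
    "DEBUG": logging.DEBUG,
    "INFO": logging.INFO,
    "WARNING": logging.WARNING,
    "ERROR": logging.ERROR,
}

def configure_logging(stream, default_level="WARNING"):
    """Build a logger whose threshold comes from the (hypothetical) LOG_LEVEL env var."""
    level_name = os.environ.get("LOG_LEVEL", default_level).upper()
    level = LEVELS.get(level_name, LEVELS[default_level])
    logger = logging.getLogger("checkout-service")  # hypothetical service name
    logger.handlers.clear()   # avoid duplicate handlers if called more than once
    logger.propagate = False  # keep output on our handler only
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    logger.setLevel(level)
    return logger

def demo():
    """Emit one INFO and one WARNING message and return the lines that were written."""
    buffer = io.StringIO()
    logger = configure_logging(buffer)
    logger.info("cart loaded")       # suppressed at the source when the threshold is WARNING
    logger.warning("payment retry")  # emitted at WARNING or any lower threshold
    return buffer.getvalue().splitlines()
```

With `LOG_LEVEL` unset, only the warning is written. Setting `LOG_LEVEL=INFO` brings the INFO message back without a code change, although the service usually still needs a restart or a config reload to pick it up.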

Changing the severity threshold at the log aggregator still incurs the cost of delivering the logs. However, you can change which levels are indexed and stored at the aggregator itself, without code changes. That change is also easier to revert if needed, such as during an incident.
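The aggregator-side variant can be sketched as a filter applied to records after they arrive. The record shape (a dict with a `level` key) and the numeric ordering below are assumptions for illustration, not any particular product's API.

```python
# Hypothetical severity ordering; real systems may use different names or numbers.
SEVERITY_ORDER = {"DEBUG": 10, "INFO": 20, "WARN": 30, "ERROR": 40}

def accept(record, threshold):
    """Return True if the record's level is at or above the threshold."""
    # Records with a missing or unknown level are treated as ERROR and kept,
    # so mislabeled messages are not silently dropped.
    level = SEVERITY_ORDER.get(record.get("level"), SEVERITY_ORDER["ERROR"])
    return level >= SEVERITY_ORDER[threshold]

records = [
    {"level": "INFO", "message": "cart loaded"},
    {"level": "WARN", "message": "payment retry"},
    {"level": "ERROR", "message": "payment failed"},
    {"message": "unlabeled message"},
]

# Normal operation: store WARN and above.
kept_normal = [r for r in records if accept(r, "WARN")]

# During an incident: lower the threshold without touching application code.
kept_incident = [r for r in records if accept(r, "INFO")]
```

Because the threshold is just a parameter on the receiving side, lowering it during an incident is a configuration change rather than a deployment.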

Pros:

- Easy to implement: significant reduction with minimal changes

- Customizable: Can be configured at a fine granularity at the source.

Cons:

- Requires discipline: log messages need to be labeled with the correct level.

- Data gaps: can miss relevant logs necessary for troubleshooting or alerting

- Migration impacts: Some alerts and dashboards may stop working, and might not be fixable since data is lost.

Basic Technique 2: Logs to metrics

Developers often use counts of specific log messages to measure throughput, delay, errors, or other metrics. This is an antipattern because logs are more expensive to process than metrics. Some log aggregation systems and observability pipeline tools allow users to convert log messages to metrics.
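To make the idea concrete, here is a minimal sketch of converting one targeted message pattern into aggregated counters. The log line format, the field names, and the `logs_to_metrics` helper are all hypothetical. Real tools typically expose this as configuration rather than code.

```python
import re
from collections import Counter

# Hypothetical message format targeted for conversion.
PATTERN = re.compile(r"request completed status=(\d{3}) duration_ms=(\d+)")

def logs_to_metrics(lines):
    """Aggregate matching lines into per-status counters; pass other lines through."""
    request_count = Counter()      # number of requests per status code
    duration_total_ms = Counter()  # summed duration per status code
    passthrough = []               # lines that are not converted and stay as logs
    for line in lines:
        match = PATTERN.search(line)
        if match is None:
            passthrough.append(line)
            continue
        status, duration = match.group(1), int(match.group(2))
        request_count[status] += 1
        duration_total_ms[status] += duration
    return request_count, duration_total_ms, passthrough

lines = [
    "request completed status=200 duration_ms=35 user_id=42",
    "request completed status=200 duration_ms=45 user_id=7",
    "request completed status=500 duration_ms=120 user_id=42",
    "cache warmed in region eu-west",
]
request_count, duration_total_ms, passthrough = logs_to_metrics(lines)
```

Three log lines collapse into a handful of counters, which is where the savings come from. The `user_id` field does not survive the conversion, which is the kind of detail loss listed under the cons.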

Pros:

- Targeted: since specific messages are targeted for conversion to metrics, this technique reduces undesired side effects.

- Certain details preserved: specifically, aggregated metrics.

Cons:

- Other details lost: while metrics are preserved, other information (such as user ID) is lost.

- Implementation effort: Hard to find candidate log messages and then rewrite dashboards and alerts.

- Lower impact: most log messages are not used as metrics, so this technique has limited implementation scope.

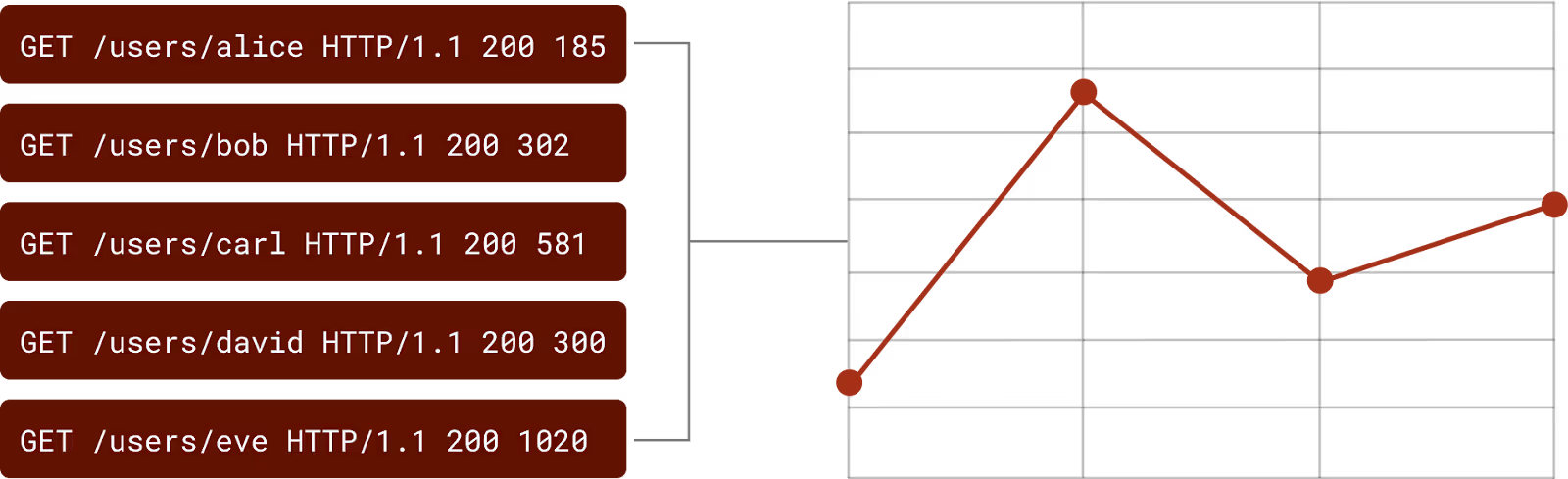

Basic Technique 3: Uniform sampling

Sampling is a powerful tool that has recently been gaining wider adoption. With sampling, you collect a randomly selected fraction of messages, say 1 out of every 100. You could apply a different sampling ratio to each severity level so that higher-severity messages are sampled less aggressively or kept in full. In Part 2 of this series, we'll talk about logarithmic sampling, an advanced technique.
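A minimal sketch of per-severity uniform sampling follows. The rates, the record shape, and the `sample` helper are assumptions chosen for illustration. A production sampler would normally live in the collection agent or pipeline.

```python
import random

# Hypothetical keep rates per severity: 1 in 100 for low severities, everything for ERROR.
SAMPLE_RATES = {"DEBUG": 0.01, "INFO": 0.01, "WARN": 0.5, "ERROR": 1.0}

def sample(records, rates, rng):
    """Keep each record independently with the probability configured for its level."""
    kept = []
    for record in records:
        # Levels without a configured rate are always kept.
        rate = rates.get(record["level"], 1.0)
        # rng.random() is in [0, 1), so a rate of 1.0 keeps everything and 0.0 keeps nothing.
        if rng.random() < rate:
            kept.append(record)
    return kept

rng = random.Random(0)  # seeded only so the sketch is repeatable
records = [{"level": "INFO", "message": f"request {i}"} for i in range(1000)]
records += [{"level": "ERROR", "message": f"failure {i}"} for i in range(5)]

kept = sample(records, SAMPLE_RATES, rng)
kept_errors = [r for r in kept if r["level"] == "ERROR"]
```

All five ERROR records survive, while roughly 1% of the INFO records do. Dividing a kept count by its sampling rate gives an estimate of the original count, which is one way to adjust dashboards that relied on absolute numbers.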

Pros:

- Powerful reduction: can reduce log volumes very quickly.

- Maintains statistical profiles: Since log messages are sampled uniformly, all message patterns are reduced proportionately, maintaining their statistical shapes.

- Customizable: Depending on how sampling is implemented, you can avoid sampling certain services’ messages and sample others more heavily.

Cons:

- Data gaps: can drop rare but important messages that can be necessary for troubleshooting.

- Requires a sampler: Most log aggregators have sampling capabilities, but this is an extra feature to check for. You might need an observability pipeline to implement it.

- Alerting and dashboarding impacts: Sampling changes the absolute counts of any messages the log aggregator is receiving. Alerts or dashboards that depend on those exact values may need to be updated.

- Implementation effort: setting up all the exclusions so as not to miss important low-volume messages could be a significant effort.

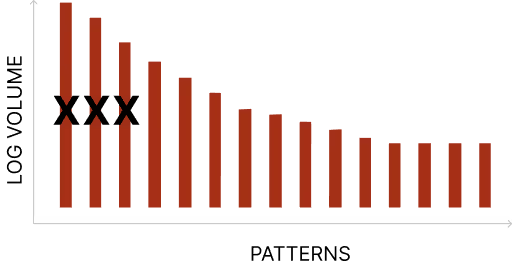

Basic Technique 4: Drop rules

Most modern log aggregators allow a user to count messages by pattern. These counts can then be used to choose patterns to drop, either at the agent collecting the logs at the source or at the log aggregation system when the log messages arrive.
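The two steps, counting by pattern and then dropping selected patterns, can be sketched as follows. The pattern extraction here simply masks digit runs, which is a deliberate simplification. Real aggregators use more sophisticated pattern detection, and the helper names are hypothetical.

```python
import re
from collections import Counter

def to_pattern(message):
    """Collapse variable parts (here, only digit runs) so similar messages group together."""
    return re.sub(r"\d+", "<num>", message)

def count_patterns(messages):
    """Count how many messages fall under each pattern."""
    return Counter(to_pattern(m) for m in messages)

def apply_drop_rules(messages, drop_patterns):
    """Return only the messages whose pattern is not in the manually chosen drop set."""
    return [m for m in messages if to_pattern(m) not in drop_patterns]

messages = [
    "heartbeat ok seq=1",
    "heartbeat ok seq=2",
    "heartbeat ok seq=3",
    "payment failed order=77",
]

# Step 1: find the highest-volume patterns.
counts = count_patterns(messages)

# Step 2: a human decides which patterns are safe to drop and configures a rule.
drop_patterns = {"heartbeat ok seq=<num>"}
remaining = apply_drop_rules(messages, drop_patterns)
```

The manual decision in step 2 is what keeps the technique targeted. It is also why the effort grows with the number of patterns.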

Pros:

- Works well in smaller environments: where the number of patterns is relatively small and doesn’t change often.

- Targeted: Since the patterns to exclude are picked manually, undesired side effects can be minimal.

Cons:

- Implementation effort: In larger environments where there may be thousands of high volume patterns, understanding which are safe to remove and which aren’t and manually configuring a rule for each would be too much effort.

- Requires continuous maintenance: in dynamic environments, the log patterns may change all the time, which would require continuous maintenance.

- Loses data: dropped log messages may hold information that’s needed for troubleshooting even though they’re high volume.

- Allows spurious spikes: A bad deployment or a simple bug may trigger a slew of new messages that wouldn’t be stopped.

Many tools, including Grepr, support all of these basic techniques. In the next blog post, I'll cover some more advanced techniques that allow Grepr to push volume reduction to 90% or more, with minimal impact on existing workflows. If you'd like to see what Grepr can do for you, schedule a demo here or sign up for a free trial here!
