Grepr is the new kid on the block while Cribl is the established veteran. Let’s look at the similarities and differences between the two and provide some insight into which would be the best fit for your organisation.

Apart from a penchant for dropping vowels; Mark Twain had some thoughts on this. What are the other similarities and differences between Grepr and Cribl?

A very superficial inspection of the two reveals that they are broadly similar. Both offer configurable data pipelines for observability data with various sources and sinks. Cribl being the established player, currently has a broader selection of sources and sinks, however, Grepr already covers the usual suspects and is regularly adding new integrations.

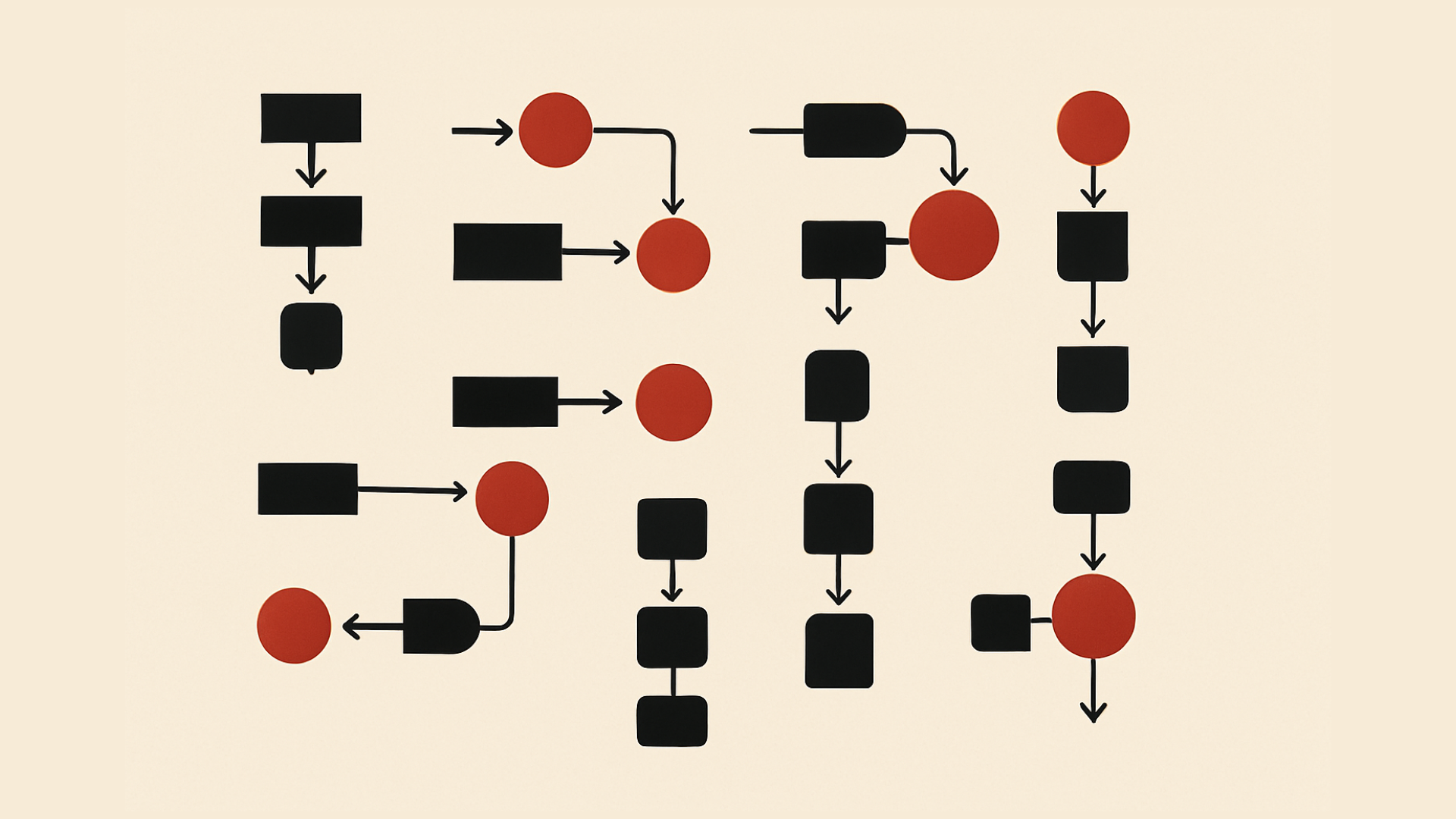

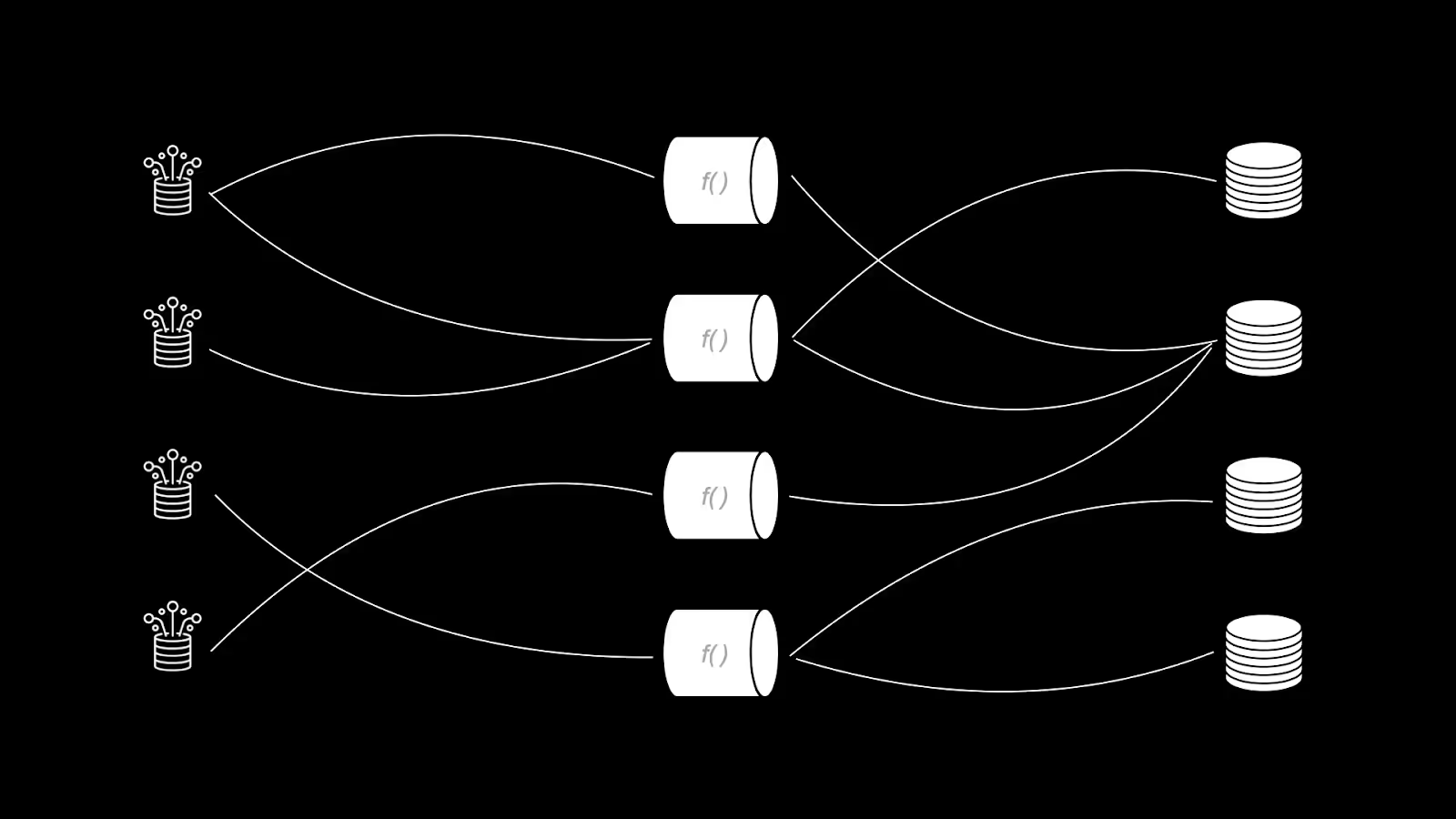

Multiple pipelines may be configured to consume data from a source(s), optionally transform the data and finally write it out to a sink(s). With multiple pipelines configured, data can be routed and transformed from many sources to multifarious sinks; just like a patch panel for data.

Intelligent Automation

Where the approaches taken by Grepr and Cribl differ is with their approach to Machine Learning (AI) and automation.

Cribl is similar to a large box of LEGO with copious specially shaped bricks with which you can build almost anything your heart desires; given enough time and effort. It consists of these main functional blocks:

Stream - The core data pipeline engine

Search - Query across data sources

Lake - Retain data in low cost storage

Edge - Observability data collector aka agent

Copilot - AI powered configuration helper

AppScope - BPF tracer

The functional reach is comprehensive, even crossing over with traditional observability platforms agent functionality. To achieve maximum efficiency with Cribl will require a team of dedicated administrators to set up and continually tune the profusion of components and their configurations.

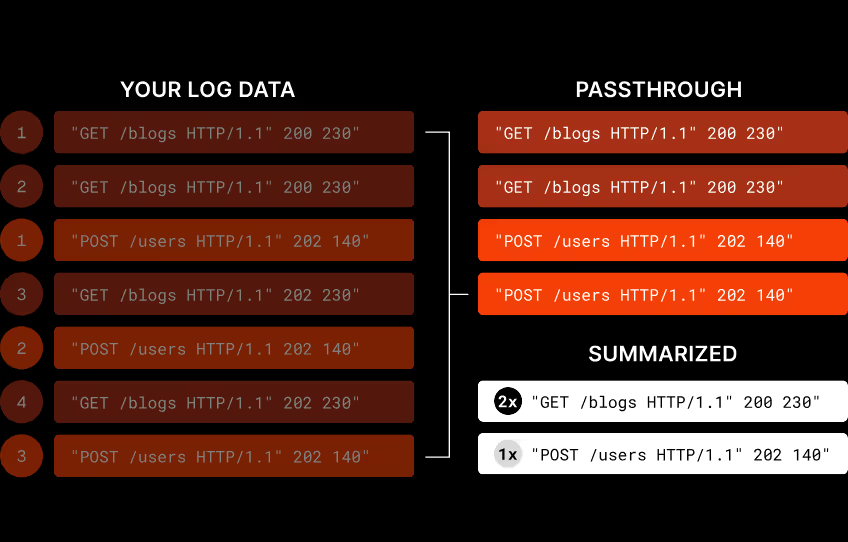

Grepr takes a more integrated approach and being fresh to the market leverages new technologies with Machine Learning (AI) and automation. All data sent to Grepr is automatically retained in low cost storage allowing you to keep more data for longer at reduced cost. Rather than being required to manually configure numerous pipeline rules to decide which data to forward to the sinks, Grepr uses Machine Learning. The AI automatically identifies noisy data and only sends periodic summaries while forwarding unique data straight through. One of Grepr’s larger customers typically has around 178,000 AI created pipeline filters; these filters have a TTL and are constantly adapting to the changing data. It would be impossible to replicate this with an entirely manual approach.

The intelligent automation of Grepr will reduce the quantity of data being passed through by 90% significantly saving on observability platform charges which typically charge by data volume. All this is achieved with minimal initial configuration and continues to be self-tuning as the data changes with time. Only when exceptions to the Machine Learning algorithm are required is manual configuration needed; it’s the exception rather than a requirement. Unlike Cribl which requires manual configuration at all times and does not automatically adapt to changes in the data streams.

Search

Both Grepr and Cribl provide the ability to search through the data that has been retained in the low cost storage. Cribl has their own Domain Specific Language (DSL) for building the queries which presents a learning curve for engineers. Grepr allows engineers to query the data using languages they are already familiar with: Datadog and Splunk with New Relic coming soon and more to follow. This completely eliminates the learning curve enabling engineers to be productive immediately.

Backfill

When an incident occurs engineers will require uncondensed data in their observability platform to investigate the issues. With Cribl they can search the data retained in low cost storage using Cribl DSL and optionally replay it through Cribl Stream. However, Cribl Stream will require configuration changes to ensure the data gets routed to the observability platform.

With Grepr engineers can search in a language they are already familiar with then optionally make that query a backfill job which will then route the search results directly to the observability platform. This workflow can be automated either via a webhook via a REST API call (such as from an anomaly detection system or from a support ticket) or by matching on anomalous messages.

Choices

Cribl is a very capable but highly complex solution which will require considerable resources for initial configuration and ongoing maintenance. Engineers will need to learn yet another query language and require ongoing support from the Cribl admin team to work effectively.

While Grepr does not have the breadth of integrations that Cribl offers, it does provide a friction-free solution for managing observability data. Its high level of automation and use of Machine Learning (AI) provides immediate and undemanding implementation. The support of familiar query languages enables engineers to be effective immediately.

If you are a large organisation with plenty of resources, the Cribl is a possible choice because of its ability to statically route data across a wide variety of sources and sinks. Alternatively Grepr requires substantially less resources to install and maintain providing a shorter time to value if you do not currently require extensive integrations.

More blog posts

All blog posts.png)

Grepr Recognized by Gartner as a Cool Vendor for AI Driven Operations

Using Grepr With Grafana Cloud